- Selecting a server in well-known countries may be free, but there may be extra fees for more “exotic” locations (South Africa, UAE, Singapore and many more). Slots (such as 10 or 50): the number of slots you select for your ARK server limits how many people can join.

- No free slots on server - bug? My friend and I are on the same LAN, and he invited me through Steam. I got the invite and accepted it, but it says: no free slots on server. Is there anything I can do to fix this? I've searched everywhere.
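A minimal sketch of how a slot limit works, using a hypothetical `GameServer` class (this is not ARK's or Steam's actual code): the server admits players until every slot is taken and then refuses further joins. That is the condition a "no free slots on server" message reports.

```python
class GameServer:
    """Hypothetical server that admits players only while slots remain."""

    def __init__(self, max_slots: int) -> None:
        self.max_slots = max_slots
        self.players: set[str] = set()

    def free_slots(self) -> int:
        return self.max_slots - len(self.players)

    def join(self, player: str) -> bool:
        """Return True if the player is admitted, False if the server is full."""
        if player in self.players:
            return True  # already connected; no extra slot is used
        if self.free_slots() == 0:
            return False  # every slot is taken: "no free slots on server"
        self.players.add(player)
        return True

    def leave(self, player: str) -> None:
        self.players.discard(player)  # frees the slot if the player held one


server = GameServer(max_slots=10)
admitted = [server.join(f"player{i}") for i in range(11)]
print(admitted.count(True), admitted[-1])  # 10 False
```

The eleventh join attempt fails until someone leaves, so choosing more slots is the only way to raise the number of simultaneous players.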

Dell PowerEdge VRTX is a computer hardware product line from Dell.[1] It is a mini-blade chassis with a built-in storage system. The VRTX comes in two models: a 19-inch rack version that is 5 rack units high, and a stand-alone tower system.[2]

Specifications

The VRTX system is partially based on the Dell M1000e blade enclosure and shares some technologies and components with it, but there are also differences. The M1000e can support an EqualLogic storage area network (SAN) that connects the servers to the storage via iSCSI, whereas the VRTX uses a shared PowerEdge RAID Controller (6Gbit PERC8). A second difference is the option to add certain PCIe cards (Gen2) and assign them to any of the four servers.[1][2]

Servers: The VRTX chassis has four half-height slots for Ivy Bridge-based PowerEdge blade servers. At launch, the PE-M520 (Xeon E5-2400v2) and the PE-M620 (Xeon E5-2600v2) were the only two supported server blades; the M520 has since been discontinued. The same blades are used in the M1000e, but for use in the VRTX they need a specific configuration with two PCIe 2.0 mezzanine cards per server. Dell offers a conversion kit for moving a blade from an M1000e to a VRTX chassis.

Storage: The VRTX chassis includes shared storage slots that connect to a single or dual PERC 8 controller(s) via switched 6Gbit SAS. This controller, which is managed through the CMC, allows RAID groups to be configured and then subdivided into individual virtual disks that can be presented to either a single blade or multiple blades. Depending on the VRTX chassis purchased, the shared storage slots are either 12 x 3.5-inch HDD slots or 24 x 2.5-inch HDD slots. Dell offers 12Gbit SAS disks for the VRTX, but these operate at the slower 6Gbit rate for compatibility with the older PERC8 and SAS switches.
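To make the storage layering concrete, here is a small model of RAID groups carved into virtual disks that are presented to one or more blades. It is only an illustration: the CMC is configured through its own interface, and the class names, function names, capacities and disk names below are hypothetical. The last helper reflects the rule above that faster disks drop to the controller's 6Gbit rate.

```python
from dataclasses import dataclass, field

BLADE_SLOTS = range(1, 5)  # the four half-height blade slots


@dataclass
class VirtualDisk:
    name: str
    size_gb: int
    blades: set[int] = field(default_factory=set)  # blades this disk is presented to


@dataclass
class RaidGroup:
    capacity_gb: int  # usable capacity after RAID overhead (hypothetical figure)
    virtual_disks: list[VirtualDisk] = field(default_factory=list)

    def free_gb(self) -> int:
        return self.capacity_gb - sum(vd.size_gb for vd in self.virtual_disks)

    def create_virtual_disk(self, name: str, size_gb: int) -> VirtualDisk:
        """Carve a virtual disk out of the group's remaining capacity."""
        if size_gb > self.free_gb():
            raise ValueError(f"only {self.free_gb()} GB left in the RAID group")
        vd = VirtualDisk(name, size_gb)
        self.virtual_disks.append(vd)
        return vd


def present(vd: VirtualDisk, *blades: int) -> None:
    """Present a virtual disk to one or more blades."""
    for blade in blades:
        if blade not in BLADE_SLOTS:
            raise ValueError(f"no blade slot {blade}")
        vd.blades.add(blade)


def negotiated_sas_gbit(disk_gbit: int, controller_gbit: int = 6) -> int:
    """The link runs at the slower end's rate, so 12Gbit disks run at 6Gbit."""
    return min(disk_gbit, controller_gbit)


group = RaidGroup(capacity_gb=4000)
shared = group.create_virtual_disk("cluster-datastore", 3000)
present(shared, 1, 2, 3, 4)  # one virtual disk visible to all four blades
local = group.create_virtual_disk("blade1-data", 500)
present(local, 1)            # another visible to a single blade
print(group.free_gb(), negotiated_sas_gbit(12))  # 500 6
```

Presenting one virtual disk to several blades is what lets the blades share storage, for example as a datastore for a hypervisor cluster.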

Networking: The VRTX chassis has a built-in IOM (I/O module) that carries Ethernet traffic to the server blades. At present the options for this IOM are an 8-port 1Gb pass-through module, a 24-port 1Gb switch (R2401) and a switch with 20 x 10Gb ports plus 2 x 1Gb ports (R2210).[3] The 8-port pass-through module offers two pass-through connections to each internal blade slot, whereas the 24-port 1Gb switch provides 16 internal ports (4 per blade slot) and 8 external ports for uplinks to the network. The R2210 has 16 internal ports, 4 external ports and two additional 1Gb external ports. The I/O modules used in the VRTX are a different size from those of the M1000e, so I/O modules are not interchangeable between the two systems.
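The port counts above can be tallied with a short sketch. The `IOModule` class is hypothetical; the numbers are the ones given in the text, and the pass-through is modeled (as an assumption) as 8 internal connections mapped one-to-one onto 8 external ports.

```python
from dataclasses import dataclass

BLADE_SLOTS = 4  # half-height blade slots in the VRTX chassis


@dataclass(frozen=True)
class IOModule:
    name: str
    internal: int       # ports wired to the blade slots
    external: int       # uplink ports at the module's main speed
    extra_1gb: int = 0  # additional 1Gb external ports (R2210 only)

    def per_blade(self) -> int:
        """Internal ports available to each blade slot."""
        return self.internal // BLADE_SLOTS

    def uplinks(self) -> int:
        """All external ports, whatever their speed."""
        return self.external + self.extra_1gb


MODULES = [
    IOModule("1Gb pass-through", internal=8, external=8),
    IOModule("R2401 1Gb switch", internal=16, external=8),
    IOModule("R2210 10Gb switch", internal=16, external=4, extra_1gb=2),
]

for m in MODULES:
    print(f"{m.name}: {m.per_blade()} ports per blade, {m.uplinks()} external")
```

For the two switches, internal plus main-speed external ports gives the advertised counts: 16 + 8 = 24 for the R2401, and 16 + 4 = 20 10Gb ports for the R2210.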

Management: A CMC is responsible for managing the entire system. It is similar to the CMC used in the M1000e chassis. The CMCs are reached via separate RJ45 Ethernet connectors.

Power and cooling: The system comes with four PSUs at 110 or 230 V AC; there is no option to use -48 V DC PSUs. Each 1100-watt PSU has a built-in fan. The server modules are cooled by four blower modules, each containing two fans, and the rest of the chassis is cooled by six internal fans that can only be reached by opening the chassis. The fans are the same units as those used in the PowerEdge R720xd rack server.

KVM: Unlike the M1000e, the VRTX doesn't have a separate KVM module; the KVM is built into the main chassis. The system supports only USB keyboards and mice. The KVM function is controlled via the mini LCD screen. The USB ports and the 15-pin VGA connector are at the front of the system.

USB: The USB connectors are only for connecting a keyboard and mouse; external storage via USB is not supported.

LCD: The mini LCD screen at the front of the system shows status information, lets you configure some basic settings (such as the CMC IP address) and manages the built-in KVM switch. The screen is operated with a five-button navigation system, similar to the one used on the M1000e.

Serial: A single RS-232 serial port is provided at the back of the system. This connector is used only for local configuration of the CMC; it cannot be used as the serial port of a server in the system.

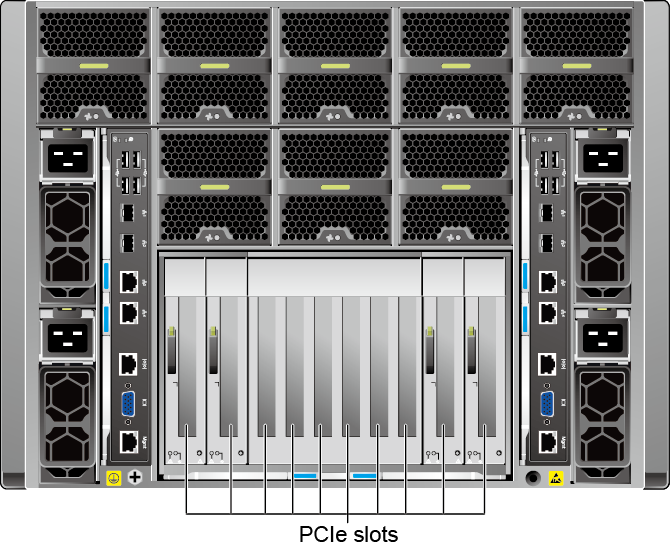

Expansion slots: The system provides space for five PCIe Gen2 (2.0) expansion cards and three full-height PCIe Gen2 (2.0) expansion cards. Via the management controller you can assign each slot to a specific server. A PCIe slot can only be assigned to a server while that server is powered off, because the PCIe card is recognized and initialized by the server BIOS at startup. Dell supports only certain PCIe cards: currently, VRTX support is limited to eight different PCIe cards, including six Ethernet NICs (Intel and Broadcom), a 6Gbit SAS adapter (LSI) and the AMD FirePro™ W7000.[4]
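The power-off rule for PCIe assignment can be expressed as a simple check. This is a hypothetical model, not a Dell interface: the management controller has its own UI, and `PcieSlotMap`, its methods and its error messages are invented for illustration. The eight slots correspond to the five plus three described above.

```python
from typing import Dict, Optional, Set


class PcieSlotMap:
    """Hypothetical model of the chassis's PCIe-slot-to-blade mapping."""

    NUM_SLOTS = 8  # five plus three full-height slots

    def __init__(self) -> None:
        # Every slot starts unassigned.
        self.owner: Dict[int, Optional[int]] = {s: None for s in range(1, self.NUM_SLOTS + 1)}
        self.powered_on: Set[int] = set()  # blades that are currently running

    def assign(self, slot: int, blade: int) -> None:
        """Assign a slot to a blade, refusing if that blade is powered on.

        The blade's BIOS only discovers PCIe cards at startup, so a card
        assigned to a running blade would not be initialized.
        """
        if slot not in self.owner:
            raise ValueError(f"no PCIe slot {slot}")
        if blade in self.powered_on:
            raise RuntimeError(f"power off blade {blade} before assigning slot {slot}")
        self.owner[slot] = blade


slots = PcieSlotMap()
slots.powered_on.add(2)  # blade 2 is running
slots.assign(1, 1)       # blade 1 is off, so this succeeds
try:
    slots.assign(2, 2)
except RuntimeError as err:
    print(err)           # power off blade 2 before assigning slot 2
```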

Usage

The VRTX is targeted at two different user groups. The first is branch offices of large enterprises, where most IT services are provided centrally from remote datacenters. Here the VRTX provides local functions, such as a relatively small virtualisation platform for locally needed services like VDI workstations, a local Exchange or Lync server, and local storage. The entire system will normally be managed by the central IT department via the CMC (for the chassis) and a management tool such as SCCM or KACE (for the servers running on the system).[5]

The other intended audience is the SME market with limited IT requirements. The tower model is designed to run in a normal office environment: Dell claims that the VRTX is quiet enough[1] to be installed in an ordinary office, so there is no need for a dedicated server room. It is, however, possible to convert a tower VRTX into a rack-mounted VRTX.

Operating systems

The server blades in a VRTX system (M520/M620/M820) have a different list of supported operating systems than their M1000e counterparts. The operating systems supported on the blades are Windows Server 2008 SP2, Windows Server 2008 R2 SP1, Windows Server 2012, Windows Server 2012 R2, VMware ESXi 5.1 and VMware ESXi 5.5. Other operating systems are supported only as virtual machines on Hyper-V or ESXi. The main intended and marketed use is as a system running Hyper-V or ESXi.[6]

The supported hypervisors on the VRTX chassis are Windows Hyper-V, VMware ESXi 5.1 and VMware ESXi 5.5. At this time, other hypervisors, such as Citrix XenServer, are not supported.

At launch no Linux-based operating systems were supported, mainly because there was no Linux driver for the MegaRAID controller used for shared storage access.[7] In June 2014, Linux support for the VRTX Shared PERC 8 was released. This driver supports the single-controller Entry Shared Mode (ESM) configuration; support for the dual-controller High Availability Shared PERC configuration has not been announced.[8]

Announced features

At launch in June 2013, Dell hinted at several future improvements and features that were not yet available.

These include:

- (Available as of April 2014) Support for dual PERC (PowerEdge RAID Controller) for redundancy of the internal shared storage slots

- Support for 10Gb Ethernet switch and pass-through modules

- Support for additional operating systems, mainly Linux-based

See also

- Dell PowerEdge - main article on Dell PowerEdge server family

- PowerEdge Generation 12 servers

- PowerEdge M520 on the M1000e page

- PowerEdge M620 on the M1000e page

- CMC - on the M1000e page

References

- [1] Chris Preimesberger (7 June 2013). 'Why Dell may have hit home with new VRTX server'. eWeek. Retrieved 30 September 2013.

- [2] 'Dell PowerEdge VRTX' (PDF). Specification sheet. Dell. 8 July 2013. Retrieved 30 September 2013.

- [3] 'R2401 & R2210 quick start guide'. Retrieved 3 July 2017.

- [4] 'PowerEdge VRTX Technical Guide' (PDF). Dell.com. Dell. Archived from the original (PDF) on 4 March 2016. Retrieved 5 January 2016.

- [5] Chris Cowley (4 June 2013). 'Dell announces VRTX'. Chris Cowley's blog. Retrieved 30 June 2013.

- [6] Dell reference architectures with three typical VRTX setups: 'Exchange 2013 on Hyper-V', 'Hyper-V 2012 server' and 'VRTX as VMware ESXi cluster'. Retrieved 4 August 2013.

- [7] 'Dell debuts VRTX for converged infrastructure', section 'Windows Only'. ServerWatch.com. 5 June 2013. Retrieved 30 June 2013.

- [8] 'VRTX Shared PERC 8 driver for Linux'. Dell.com. 17 June 2014. Retrieved 15 January 2015.

The hardware components that a typical server computer comprises are similar to the components used in less expensive client computers. However, server computers are usually built from higher-grade components than client computers. The following paragraphs describe the typical components of a server computer.

Motherboard

The motherboard is the computer’s main electronic circuit board, to which all the other components of your computer are connected. More than any other component, the motherboard is the computer.

The major components on the motherboard include the processor (or CPU), supporting circuitry called the chipset, memory, expansion slots, a standard IDE hard drive controller, and input/output (I/O) ports for devices such as keyboards, mice, and printers. Some motherboards also include additional built-in features such as a graphics adapter, SCSI disk controller, or a network interface.

Processor

The processor, or CPU, is the brain of the computer. Although the processor isn’t the only component that affects overall system performance, it is the one that most people think of first when deciding what type of server to purchase. At the time of this writing, Intel had four processor models designed for use in server computers:

- Itanium 2: 1.60GHz clock speed; 1–2 processor cores

- Xeon: 1.83–2.33GHz clock speed; 1–4 processor cores

- Pentium D: 2.66–3.6GHz clock speed; 2 processor cores

- Pentium 4: 2.4–3.6GHz clock speed; 1 processor core

Each motherboard is designed to support a particular type of processor. CPUs come in two basic mounting styles: slot or socket. Because each style comes in several variants, you have to make sure that the motherboard supports the specific slot or socket used by the CPU. Some server motherboards have two or more slots or sockets to hold two or more CPUs.
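The compatibility check just described comes down to two questions: does the CPU use the board's slot or socket style, and does the board have enough of them? A minimal sketch follows. The part names and socket labels are made up for illustration and do not refer to real products.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cpu:
    model: str
    mount: str  # slot or socket style the CPU needs (placeholder label)


@dataclass(frozen=True)
class Motherboard:
    model: str
    mount: str          # slot or socket style the board provides
    cpu_positions: int  # how many of those slots/sockets the board has


def can_install(board: Motherboard, cpu: Cpu, count: int = 1) -> bool:
    """True if `count` copies of `cpu` match the board's style and fit on it."""
    return cpu.mount == board.mount and 1 <= count <= board.cpu_positions


# Hypothetical parts, for illustration only.
dual_board = Motherboard("ExampleBoard-2S", mount="socket-A", cpu_positions=2)
cpu_a = Cpu("ExampleCPU-A", mount="socket-A")
cpu_b = Cpu("ExampleCPU-B", mount="socket-B")

print(can_install(dual_board, cpu_a, count=2))  # True
print(can_install(dual_board, cpu_b))           # False: wrong socket
```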

The term clock speed refers to how fast the basic clock that drives the processor’s operation ticks. In theory, the faster the clock speed, the faster the processor. However, clock speed alone is a reliable comparison only between processors of the same family. For example, Itanium processors are faster than Xeon processors at the same clock speed, and the same holds true for Xeon processors compared with Pentium D processors. That’s because the newer processor models contain more advanced circuitry than the older models, so they can accomplish more work with each tick of the clock.

The number of processor cores also has a dramatic effect on performance. Each processor core acts as if it’s a separate processor. Most server computers use dual-core (two processor cores) or quad-core (four cores) chips.
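A rough way to combine the last two paragraphs is to treat peak throughput as clock speed times work done per tick (often called instructions per clock, or IPC) times the number of cores. This is a first-order model only: it assumes the workload keeps every core busy and ignores memory and I/O. The IPC figures below are hypothetical and do not describe any real processor.

```python
def relative_throughput(clock_ghz: float, ipc: float, cores: int) -> float:
    """Ticks per second x work per tick x cores (first-order estimate)."""
    return clock_ghz * ipc * cores


# Hypothetical chips: an older design with a faster clock, and a newer
# design that does twice as much work per tick at a slower clock.
older = relative_throughput(clock_ghz=3.0, ipc=1.0, cores=1)
newer = relative_throughput(clock_ghz=2.0, ipc=2.0, cores=1)
newer_quad = relative_throughput(clock_ghz=2.0, ipc=2.0, cores=4)
print(older, newer, newer_quad)  # 3.0 4.0 16.0
```

The slower-clocked newer chip comes out ahead because it does more per tick, and the quad-core version multiplies that again, provided the software can actually use all four cores.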

Memory

Don’t scrimp on memory. People rarely complain about servers having too much memory. Many different types of memory are available, so you have to pick the right type of memory to match the memory supported by your motherboard. The total memory capacity of the server depends on the motherboard. Most new servers can support at least 12GB of memory, and some can handle up to 32GB.

Hard drives

Most desktop computers use inexpensive hard drives called IDE drives (sometimes also called ATA). These drives are adequate for individual users, but because performance is more important for servers, another type of drive known as SCSI is usually used instead. For the best performance, use SCSI drives along with a high-performance SCSI controller card.

Recently, a new type of inexpensive drive called SATA has begun appearing in desktop computers. SATA drives are increasingly used in server computers as well, thanks to their reliability and performance.

Network connection

The network connection is one of the most important parts of any server. Many servers have network adapters built into the motherboard. If your server doesn’t, you’ll need to add a separate network adapter card.

Video

Fancy graphics aren’t that important for a server computer. You can equip your servers with inexpensive generic video cards and monitors without affecting network performance. (This is one of the few areas where it’s acceptable to cut costs on a server.)

Power supply

Because a server usually has more devices than a typical desktop computer, it requires a larger power supply (300 watts is typical). If the server houses a large number of hard drives, it may require an even larger power supply.
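Power supply sizing is addition plus a safety margin. The sketch below totals estimated per-component draws and adds headroom. The wattages, the 12 W per drive and the 30% margin are all assumptions chosen for illustration, not figures from the text. It shows how adding drives can push a server past a typical 300-watt supply.

```python
def required_psu_watts(loads_w: dict[str, int], headroom: float = 0.3) -> int:
    """Sum the estimated draw of each component and add a safety margin."""
    return round(sum(loads_w.values()) * (1 + headroom))


DRIVE_W = 12  # hypothetical draw per hard drive, in watts


def server_loads(num_drives: int) -> dict[str, int]:
    """Hypothetical per-component estimates in watts."""
    return {
        "motherboard + CPU": 120,
        "memory": 20,
        "network + video": 25,
        "hard drives": num_drives * DRIVE_W,
    }


for drives in (4, 12):
    need = required_psu_watts(server_loads(drives))
    verdict = "300 W is enough" if need <= 300 else "needs a larger PSU"
    print(f"{drives} drives: ~{need} W -> {verdict}")
```

With these assumed figures, four drives need about 277 W, while twelve drives need about 402 W and exceed a 300-watt supply.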